Final Project: Description and Update

The goal of this project is to transform an object in 3D space onto a 2D image. After synthesizing an image that contains the projected object, I plan to add lines related to the depth of the projected object onto the image as well. This latter goal is inspired by the work of Jim Sanborn, who was listed on the course website.

Background:

The projection of an object onto a camera sensor depends on the properties of the camera and on the 3D position of the object relative to the camera. The algorithm must calculate the position of the object’s image on the sensor, the magnification of the object, and the amount of blur (which depends on the plane on which the camera is focused and on the lens aperture). The user specifies the following:

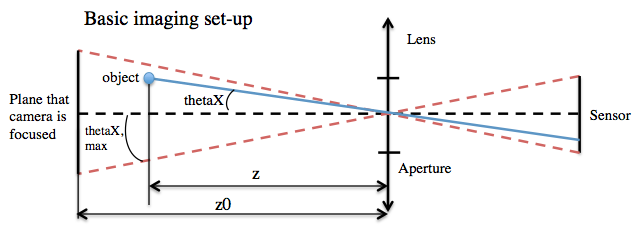

Xposition (or thetaX) – x position of object in 3D space relative to lens

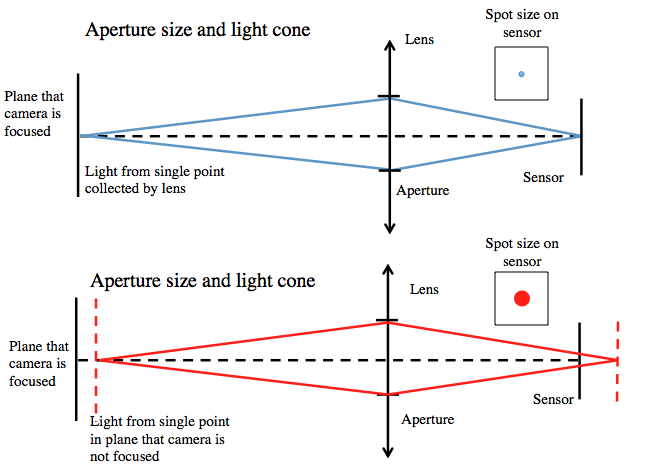

Yposition (or thetaY) – y position of object in 3D space relative to lens

z position – z position of object in 3D space relative to lens

sensorX – size of sensor (horizontal)

sensorY – size of sensor (vertical)

aperture radius – radius of aperture stop in camera

z0 – distance at which the camera is focused

These figures show the geometry for determining where an object in space would be imaged onto a sensor. I am only showing the xz plane, but you can imagine extending this to the yz plane as well. As the aperture stop gets larger, the camera collects more light, but the depth of field shrinks. Therefore, if the object is placed in a plane on which the camera is not focused, the spot size (the blur of the image) increases with the aperture.
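The geometry above can be sketched in a few lines of Python. This is only a minimal sketch under a thin-lens assumption, not the actual project code: the `Camera` class, the `project` function, and the focal length parameter are names I am introducing for illustration, and the sensor size in the usage lines is a hypothetical value. Distances are in mm, and z is measured from the lens toward the object.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    focal_length: float     # mm (assumed parameter, thin-lens model)
    aperture_radius: float  # mm, radius of the aperture stop
    z0: float               # mm, distance at which the camera is focused
    sensor_x: float         # mm, horizontal sensor size
    sensor_y: float         # mm, vertical sensor size


def project(cam, x, y, z):
    """Return (u, v, magnification, blur_diameter, on_sensor) for a point at (x, y, z)."""
    f = cam.focal_length
    if z <= f or cam.z0 <= f:
        raise ValueError("thin-lens sketch needs z and z0 larger than the focal length")

    # Lens-to-sensor distance, from 1/f = 1/z0 + 1/s with the camera focused at z0.
    s = f * cam.z0 / (cam.z0 - f)
    # Distance behind the lens at which the object itself would come to a sharp focus.
    s_obj = f * z / (z - f)

    # The ray through the lens center is undeviated, so the image is scaled by s / z
    # and inverted.
    magnification = s / z
    u, v = -magnification * x, -magnification * y

    # The cone of light from the aperture converges at s_obj; its width at the
    # sensor plane is the blur spot diameter (similar triangles).
    blur_diameter = 2.0 * cam.aperture_radius * abs(s - s_obj) / s_obj

    on_sensor = abs(u) <= cam.sensor_x / 2 and abs(v) <= cam.sensor_y / 2
    return u, v, magnification, blur_diameter, on_sensor


# Hypothetical camera: 50 mm lens at f/1.8 focused at 100 mm, made-up sensor size.
cam = Camera(focal_length=50.0, aperture_radius=50.0 / 1.8 / 2,
             z0=100.0, sensor_x=23.5, sensor_y=15.6)
print(project(cam, 2.0, -1.0, 150.0))
```

An object in the focused plane (z = z0) gets a blur diameter of zero, and the blur grows both with the aperture radius and with the distance of the object from that plane, which matches the behavior described above.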

From this geometry, I wrote an algorithm for transforming an input image (the object) onto another image (the sensor). The object image is blurred according to the calculated spot size, and then Poisson blending is used to blend the two images together.
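To illustrate the blur-then-blend step, here is a rough sketch using only NumPy. It makes several assumptions that are not from the project itself: images are grayscale 2D arrays, the spot size has already been converted to pixels, the blur is modeled as a uniform disk, and the final step uses simple alpha compositing as a stand-in for Poisson blending (which would need a gradient-domain solver). The function names are hypothetical.

```python
import numpy as np


def disk_kernel(diameter_px):
    """Normalized disk-shaped kernel approximating a defocus spot."""
    radius = max(diameter_px / 2.0, 0.5)  # at least a single pixel
    half = int(np.ceil(radius))
    yy, xx = np.mgrid[-half:half + 1, -half:half + 1]
    kernel = (xx ** 2 + yy ** 2 <= radius ** 2).astype(float)
    return kernel / kernel.sum()


def blur_image(image, kernel):
    """Filter a 2D image with a symmetric kernel, zero-padding the borders."""
    half = kernel.shape[0] // 2
    padded = np.pad(image, half)
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def paste_blurred(background, obj, top, left, blur_diameter_px):
    """Blur obj by the spot size and alpha-composite it onto background.

    Alpha compositing is a stand-in here; it is NOT Poisson blending.
    """
    kernel = disk_kernel(blur_diameter_px)
    half = kernel.shape[0] // 2
    # Zero-pad so the blur can spread beyond the object's original outline.
    alpha = blur_image(np.pad(np.ones_like(obj, dtype=float), half), kernel)
    colour = blur_image(np.pad(obj.astype(float), half), kernel)  # premultiplied

    out = background.astype(float).copy()
    t, l = top - half, left - half
    h, w = alpha.shape
    if t < 0 or l < 0 or t + h > out.shape[0] or l + w > out.shape[1]:
        raise ValueError("blurred object does not fit inside the background")
    region = out[t:t + h, l:l + w]
    out[t:t + h, l:l + w] = colour + (1.0 - alpha) * region
    return out


# Usage: a flat 4x4 "object" pasted onto an empty 20x20 "sensor" with a 4-pixel spot.
sensor = paste_blurred(np.zeros((20, 20)), np.ones((4, 4)), top=8, left=8,
                       blur_diameter_px=4.0)
```

Blurring the alpha mask together with the object gives soft edges that widen with the spot size, so an out-of-focus object fades into the scene instead of keeping a hard outline.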

Preliminary Results

To test the algorithm, I set up a scene with plants positioned at different distances from the camera and took a picture with a 50mm lens (f/# = 1.8). The camera was focused at a distance of 100mm (i.e. z0 = 100mm). I then selected an object (a picture of a butterfly) to be “imaged” by the camera using the algorithm, and tested several x, y, z positions. Here are some results. The maximum FOV, given the sensor size and the camera lens, was 6.7° by 4.5°.
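For reference, a field of view can be estimated from the sensor size and the lens-to-sensor distance with basic trigonometry. The sketch below is an assumption about how such a number could be computed, not the calculation used for the figures above; the function name is hypothetical, and the sensor size and distance in the usage line are made-up values rather than those of the camera used in the test, so it is not expected to reproduce the reported FOV.

```python
import math


def field_of_view_deg(sensor_x, sensor_y, lens_to_sensor):
    """Full horizontal and vertical field of view, in degrees.

    sensor_x, sensor_y: sensor size (same length unit as lens_to_sensor).
    lens_to_sensor: distance from the lens to the sensor plane.
    """
    fov_x = 2.0 * math.degrees(math.atan(sensor_x / (2.0 * lens_to_sensor)))
    fov_y = 2.0 * math.degrees(math.atan(sensor_y / (2.0 * lens_to_sensor)))
    return fov_x, fov_y


# Hypothetical values: a 36 mm x 24 mm sensor placed 50 mm behind the lens.
print(field_of_view_deg(36.0, 24.0, 50.0))
```

Halving each returned angle gives the largest off-axis angle (thetaX or thetaY) at which an object still lands on the sensor.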

Plan for rest of project

It looks like the algorithm is working pretty well, but it requires a lot of knowledge of the camera properties. One of the most interesting parts of this project is how depth information is transformed onto the 2D plane. We talked about several approaches for determining depth in a scene during class this year, but most of them require video as input data (time correlation and PCA). I'd like to try some of those approaches for determining depth in the scene, and then add lines around the object that are a function of depth, like Sanborn's "implied geometry series." The algorithm could also be used to synthesize video of an object moving along any trajectory in 3D space.
